Lessons Learned in Monitoring and Improving Large-Scale Test Suites

We have been running our automated test smell analyses for close to five years now, in many different projects and domains. Time to collect lessons learned and provide a historical view of how our automatic test analysis has evolved. But let’s start from the beginning: what are test smell analyses, and why do we need them?

What are test smell analyses?

To understand what test smell analyses are, it is important to understand that quality defects in test cases can result in major problems.

Managing Natural Language Tests is difficult

Large-scale test suites are often improperly structured. In addition, we often see a lot of duplicates and unnecessary test cases. These quality problems make it difficult to find the right test cases, for instance when test cases have to be changed. We also often find test cases that do not follow common best practices. This can lead to problems during test execution, such as misinterpretation of the test description.

Introducing our Test Smells

Our test smell analyses help authors and managers tackle the following common quality problems (since this is not the main topic of this blog post, we do not want to introduce these smells in detail; if you have further questions about some defects, don’t hesitate to contact us):

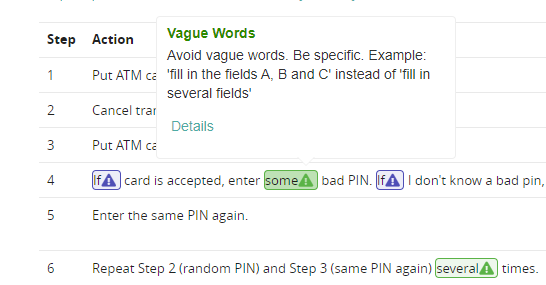

- ambiguous test descriptions (Vague Words)

- tests which are hard to execute (Branches in Test Flow, Excessive Steps)

- tests which are difficult to maintain (Cloning, missing structure of test suite)

- superfluous test cases (empty, old, or duplicated test cases)

But test smell analyses also help test managers to:

- get an overview of test suite quality (Dashboards, Reports)

- identify demands of the test team (efforts for structuring test cases accordingly)

- get notified of urgent quality problems

But how do test smell analyses work?

How do Test Smell Analyses work?

In the first step, we import all test case data from the test case management system (e.g. Azure DevOps) into Qualicen Scout. Qualicen Scout then analyses the text of the test cases and highlights quality problems such as the smells I mentioned above. Together with the highlighted textual problem areas, Scout provides a dashboard with quality indicators and KPIs.
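To make the idea of a text-based analysis more concrete, here is a minimal sketch of how smells such as Vague Words or Excessive Steps could be flagged in natural-language test steps. It is not how Qualicen Scout is implemented: the word list, the step threshold, and all names (`VAGUE_WORDS`, `MAX_STEPS`, `Finding`, `analyse_test_case`) are assumptions made up for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical word list and threshold, chosen only for illustration.
VAGUE_WORDS = {"appropriate", "some", "several", "quickly", "correctly", "etc"}
MAX_STEPS = 10


@dataclass
class Finding:
    test_id: str
    smell: str
    detail: str


def analyse_test_case(test_id: str, steps: list[str]) -> list[Finding]:
    """Return the smells found in the natural-language steps of one test case."""
    findings = []
    if not steps:
        findings.append(Finding(test_id, "Empty Test", "no steps"))
    if len(steps) > MAX_STEPS:
        findings.append(Finding(test_id, "Excessive Steps", f"{len(steps)} steps"))
    for number, step in enumerate(steps, start=1):
        # Compare every lowercase word of the step against the vague-word list.
        for word in re.findall(r"[a-z]+", step.lower()):
            if word in VAGUE_WORDS:
                findings.append(
                    Finding(test_id, "Vague Words", f"step {number}: '{word}'")
                )
    return findings


example = analyse_test_case(
    "TC-1", ["Open the settings page", "Check that the page loads quickly"]
)
for finding in example:
    print(finding.smell, finding.detail)  # prints: Vague Words step 2: 'quickly'
```

A real analysis needs far more linguistic care than a word list, but the shape is the same: test text goes in, located findings come out, and the findings feed the dashboard.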

After one million words, our experiences

In Qualicen’s young history, we have analysed well over one million words in test descriptions from different development projects and teams all over the world. What did we learn?

Lesson 1: Detecting findings alone is not enough

In the early days of Qualicen Scout, we thought it would be enough to provide the teams with Scout and they would start to fix quality defects right away. But the teams came to us, and most had the same question:

“How do I use the Scout?”

Using a quality tool like Scout was a new concept for most authors, so we had to show them how to find and use the information Scout provides. After almost five years of experience and iterative improvement, we have developed a highly effective introduction session of around 30 minutes to explain Qualicen Scout.

Now that we had an introduction session, we expected the teams would start to fix quality issues but in most cases this did not happen right away.

“But where should we start? What are the most critical issues? We have a limited budget!”

Our solution: Reports which address two purposes:

- Provide a quality overview of the entire test suite, similar to the dashboards in our tools but in a PowerPoint format. The PowerPoint is targeted specifically at managers, who need a different, more condensed view of the data without accessing Scout.

- Provide specific tasks that state which quality issues should be addressed first. For each test smell analysis, we give the teams tasks such as “Fix the cloned test steps in test cases TC-11 and TC-22 by introducing shared steps (specific to Azure DevOps)”
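As an illustration of how such a task could be derived, the following sketch finds steps that appear in more than one test case and turns them into task descriptions. It only matches steps that are identical after normalising case and whitespace, which is a deliberately simple stand-in and not the clone detection Scout uses; the function names and the sample test cases are hypothetical.

```python
from collections import defaultdict


def normalise(step: str) -> str:
    """Lowercase a step and collapse whitespace so trivial variations still match."""
    return " ".join(step.lower().split())


def find_cloned_steps(test_cases: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each step that occurs in more than one test case to those test case IDs."""
    seen = defaultdict(set)
    for test_id, steps in test_cases.items():
        for step in steps:
            seen[normalise(step)].add(test_id)
    return {step: sorted(ids) for step, ids in seen.items() if len(ids) > 1}


# Made-up sample data, using the test case IDs from the example task above.
suite = {
    "TC-11": ["Log in as administrator", "Open the user list"],
    "TC-22": ["Log in as  Administrator", "Delete the test user"],
}
for step, ids in find_cloned_steps(suite).items():
    print(f"Fix the cloned step '{step}' in {' and '.join(ids)}")
```

The point of the task list is prioritisation: instead of a raw list of findings, authors get a short set of concrete actions tied to specific test cases.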

For many teams this was the perfect solution as they tried to resolve the first quality issues. Note: the “first” quality issues!

Lesson 2: Solving quality issues is not trivial

If quality were easy to achieve, we would be out of business. So we received many questions of this type:

“But how do I fix finding XYZ?”

We started with one-on-one meetings, teaching each author individually. After a few of these sessions with different teams we recognized that many questions from the teams were the same or similar.

With this knowledge, we added a new section to the introduction meetings I mentioned earlier: an explanation of the different types of quality defects highlighted by Scout and how to resolve them.

For some Azure DevOps-related solutions, we also created screencasts with a step-by-step guide within the test management tool.

This way we increased the effectiveness of our introduction meeting and aided teams with easy-to-apply solutions.

Lesson 3: The testing teams need early feedback

After answering the previously mentioned questions, we felt confident that the quality process would work fine. The teams knew what to do and how to do it, and they used the information Scout provided, either to fix existing quality defects or to improve the quality of new test cases. But over a period of a few months we noticed something: the quality indicators in the Scout dashboards showed a negative trend. What happened?

Several deadlines for product releases put a lot of pressure on the testing teams. We have all seen this before, right? As a result, the teams did not have enough time to focus on the quality of the test cases. Management was also not aware of the quality problems this pressure introduced. This led to a vicious circle: many new quality issues led to larger efforts to maintain the existing test cases, which led to even more time pressure.

In addition, there were several new team members in the teams and there was never enough time to introduce Qualicen Scout to the new colleagues.

To break this vicious circle, we introduced short monthly assessments of the test suite’s quality. For simplicity we added a traffic light scale:

- Red: “new critical quality issues were introduced which need a lot of effort to resolve”

- Yellow: “some new quality issues were introduced which can be handled easily”

- Green: “only minor quality issues with nearly no impact on the overall quality”
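The traffic light scale can be read as a simple decision rule over the findings that are new since the last assessment. The sketch below assumes that each new finding carries one of three severity labels ("critical", "moderate", "minor"); this labelling and the names `Light` and `monthly_assessment` are assumptions for illustration, since our actual assessments also involve judgement about the effort needed to resolve the issues.

```python
from enum import Enum


class Light(Enum):
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"


def monthly_assessment(new_severities: list[str]) -> Light:
    """Condense the severities of this month's new findings into one traffic light."""
    if "critical" in new_severities:
        return Light.RED      # new critical issues that need a lot of effort
    if "moderate" in new_severities:
        return Light.YELLOW   # some new issues that can be handled easily
    return Light.GREEN        # at most minor issues with nearly no impact


print(monthly_assessment(["minor", "moderate", "minor"]).value)  # prints: yellow
```

Reducing a month of findings to a single colour loses detail on purpose: the detailed view stays in the dashboard, while the colour is what reaches management.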

Every month the teams and managers would receive this highly condensed quality overview. The monthly assessment raised awareness at management level. As a result, the testing teams got the time they needed to fix the quality problems and break the vicious circle.

Lesson 4: Some teams need consulting

After working with the monthly assessments for a while, most of the teams had green or at least yellow assessments. But there were still some teams with red assessments. We approached these teams, and after some pleasant conversations we figured out that they were completely aware of the quality of their test cases. However, structural problems in the team, their process, or their test management tool prevented them from writing better test cases.

In these cases, Scout, quality reports, and monthly assessments were not enough. What resolved those structural problems was consulting. And yes, consulting projects are expensive compared to quality assurance tools like Scout. But suffering the consequences of bad testing is far more expensive and painful, and potentially dangerous too!

TL;DR:

- Introduction meetings which explain Qualicen Scout are important to enable teams to use Scout and the information it provides.

- Teams need supporting material such as screencasts and slides to resolve quality problems.

- Initial reports help teams focus on the most critical issues at the beginning of the development process.

- Teams and management need monthly feedback to increase awareness and actively monitor the quality of a test suite.

- Consulting is required for high-level, structural problems.