The ugly truth about automatic methods for requirements engineering quality.

OK, after I publish this blog post, I will probably get some angry calls from my sales department... Well, the truth must be told. There are many crazy defects in requirements, and, as I wrote in my last post, you can detect quite a few of them automatically (and you should do so!). When I present our automatic methods for detecting natural language requirement smells or defects in tests to our customers, I'm proud to say that they are usually very excited. Sometimes they are too excited, and this can turn into a problem: I explain all the amazing things that you can detect with tools, and suddenly people think that the tool will solve all the problems they face. Spoiler alert: It doesn't. And because we're a company that is interested in happy customers, I want to briefly summarize the problems (those that come to my mind, at least) that a tool can't solve. And because I don't want to leave you in despair, I will also suggest how I personally would work on each of these problems.

Which quality defects can you automatically detect in system tests and requirements?

I am a strong advocate of modern, automatic methods to improve our day-to-day life. I really don't want to check by hand whether my tests and requirements fit my template, or whether my sentences are readable. So quality assurance and defect detection, for example in reviews or inspections, should use automation as far as possible. BUT: When I speak to clients, people sometimes get so hooked on the idea of automatic smell detection that I need to slow them down. Therefore, this post tries to give a rough overview: What is possible to detect automatically? The answer basically depends on two questions:

- How much syntax (or structure) do your artifacts and tests have?

- Which language do you use?
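To make these two questions a bit more tangible, here is a minimal sketch of the kind of check I would rather not do by hand. It is not our actual tooling: the sentence template, the word limit, and the function name `find_smells` are assumptions invented for illustration. Note how both questions show up. The template check only works because the requirement has some structure, and the keywords are specific to English.

```python
import re

# Hypothetical template: "The <system> shall|must <action>."
# (an assumption for illustration, not a standard)
TEMPLATE = re.compile(r"^(The|A|An)\s+.+?\s+(shall|must)\s+.+\.$")

# Assumed readability threshold, chosen arbitrarily for this sketch.
MAX_WORDS = 25


def find_smells(requirement: str) -> list[str]:
    """Return a list of findings for a single requirement sentence."""
    findings = []
    text = requirement.strip()
    if not TEMPLATE.match(text):
        findings.append("does not match template")
    if len(text.split()) > MAX_WORDS:
        findings.append("sentence too long")
    return findings


# Made-up example requirements.
requirements = [
    "The system shall lock the account after three failed logins.",
    "Users can probably log in somehow",
]

for req in requirements:
    print(req, "->", find_smells(req) or "ok")
```

A check like this can tell you that a sentence is too long or off-template. It cannot tell you whether the requirement is the right one.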

Conditionals: Why you should avoid these two letters for better test case execution

At Qualicen, it's often my job to check other people's system test cases and tell the team what I think about these tests. So what do I look for? Well, in principle it is simple: After tests are written down, they are "only" executed and maintained. So this is where tests can go wrong, and I try to spot things that make execution and maintenance harder. For maintenance, the largest problem is clones, which we covered in our last blog post. For test execution, the main problem that you want to avoid is that different testers test different things. This is called ambiguity and comes in many flavors. In this blog post, I want to explain what structural ambiguity is and why it is bad, and this way help you to create better test cases.

(Scroll to the summary if you don't care about the details.) ;)

Ambiguous test flow

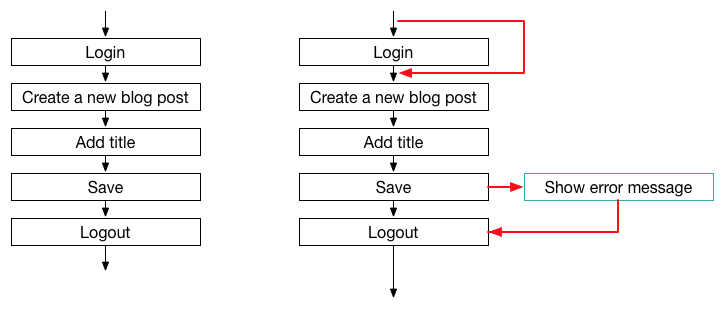

The problem for test execution that I want to discuss here is an ambiguous test flow. This means that, for a single test case, there are multiple paths that a tester can follow when she executes the test. Let's look at an example.

A simple, straightforward natural language system test case.
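If you want to spot steps that open up more than one path automatically, a rough first pass could look like the sketch below. The list of conditional markers, the function name `conditional_steps`, and the test steps are all assumptions made up for illustration. A keyword search like this will miss conditionals that are phrased differently and only works for English test cases.

```python
import re

# Assumed English conditional markers; other languages need their own list.
CONDITIONAL = re.compile(r"\b(if|otherwise|else|unless|in case)\b", re.IGNORECASE)


def conditional_steps(steps):
    """Return (step_number, text) for each step that contains a conditional marker."""
    return [(i, s) for i, s in enumerate(steps, start=1) if CONDITIONAL.search(s)]


# Hypothetical test case, invented for illustration.
steps = [
    "Open the login page.",
    "Enter a valid user name and password.",
    "If a cookie banner appears, close it.",
    "Click 'Log in' and check that the dashboard is shown.",
]

for number, text in conditional_steps(steps):
    print(f"Step {number} branches the test flow: {text}")
```

Every flagged step is a point where two testers may end up following different paths through the same test case.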
