Turbocharging Textsearch with NLP

Searching through a piece of text doesn’t sound like a task one would need a lot of fancy NLP technology for, but we recently had a case where this was actually necessary:

One of our customers asked us if we could help them with searching for specific terms in their documents. Their task was to deal with contracts and requirements, where missing even a small detail can potentially cost millions down the line. Additionally, these documents are usually very long, sometimes several thousands of pages. So if one where to simply Ctrl + F for a specific term one might get hundreds and hundreds of results, most of which irrelevant.

This is what made the entire case tricky. Any given term may or may not be relevant depending on the context it is in.

Drilling deeper into the problem

Let’s say for example, we had a tender laying out the details for an electric drill. This drill is supposed to be able to be powered both by batteries and cord. The tender lays out several details about how much power both inputs have to be able to provide.

Now imagine a battery manufacturer is interested in producing batteries for this drill. To make sure their products are up to spec, they search for the term “power” in order to find the expected power output. The problem they will now get, is that this term occurs multiple times in the tender: Both in the context of electrical power for the cord, as well as the battery. Additionally the tender contains information about the amount of power (i.e. force) the engine is supposed to provide. This means that our manufacturer has to dig through a huge amount of findings to get what they are actually looking for. This problem gets only more difficult since there are usually multiple synonymous terms used in such tenders, increasing the room for error.

The goal was: Minimize the number of false positives without introducing any false negatives (since these can be very costly). Our customer had already developed an inhouse solution for this problem, which allowed for more nuanced searches using regular expressions as well as checking whether certain terms do or do not occur on the same page as the potential finding. This was in an effort to try to limit the number of potential false positives. The logic was that a finding was only relevant if it occurs on the same page as another term or if a certain term wasn’t on the same page. So, for example, we could use the terms “engine”, and “cord” as a filter and eliminate all occurrences on the same page, since we likely don’t care about them.

This definitely helped with their problem but was still not good enough to limit the number of findings. Additionally, it made searching a lot more complex since if a filter was too broad one would lose important findings. If, however, the filter wasn’t broad enough it would simply not work.

Our Solution

And this is where we came into play: In order to identify false positives we developed a filtering algorithm that is able to compute the probability of any finding being a false positive, given its context. The idea is that the person searching describes the relevant context by providing a list of relevant or irrelevant keywords. These keywords are similar to the filters the customer was already using but instead of being a binary accept/reject criterion we use these keywords as an input to a fingerprinting algorithm. This algorithm is able to produce a contextual fingerprint for any given section of text.

This fingerprint consists of several indicators, for example the proximity to context keywords, but also the content of the surrounding pages, and headlines are taken into account. We then proceeded to train a Random Decision Forest to take a fingerprint and classify it as either relevant or irrelevant.

This made the overall search a lot more nuanced and powerful since it was now able to use multiple criteria with different weights. Additionally it allowed us to assign a certain relevancy score for any text section. Take this for example:

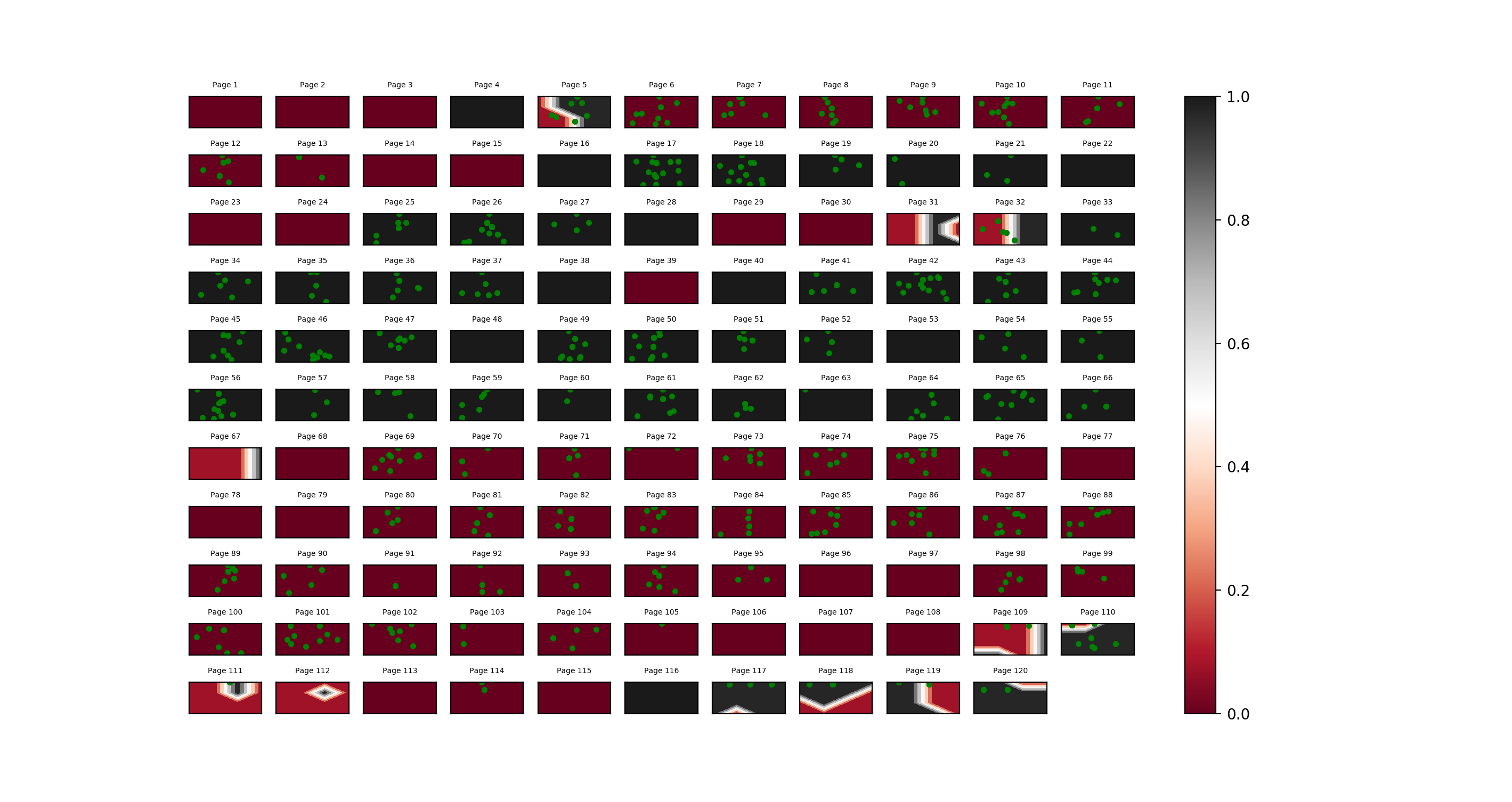

Yellow: Bread & Muffins, Blue: Soups, Green: Vegetables,

Pink: Salads, Grey: Main Courses, Turquoise: Desserts,

Red: Snacks & Drinks

Above you see the results of our rating algorithm applied to a cookbook. The tasks was simple: Find all occurrences of eggs or flour in the book. As you can guess this happens a lot in a cookbook. Every single green dot stands for one occurrence of one of the terms on a page. Next we added a context. We wanted to find every place where an egg was used in something that isn’t a dessert. So we told our algorithm that terms like ‘dessert’, ‘cake’, or ‘ice cream’ indicate less relevance. Only a couple of examples where necessary to make it work. You can see the result as the background color of the boxes in the above picture: Red indicates less relevance (In this case these would be desserts), while black indicates relevant findings.

The book started with a section on various baked goods. The first couple of which where sweet and therefore identified as deserts, it however also included recipes for bread and other savory baked goods. What makes this visualization really neat is that one can easily see pages that include both sweet and savory dishes (for example page 5) since they are marked with both colors. After baked goods came salads and main dishes. First we were confused why our algorithm thought that something in the salad section would be classified as a dessert until we looked it up and found recipes for fruit salads, which were correctly classified as sweet. Lastly came the desserts and finally a chapter on beverages and a glossary (the last couple of mixed pages).

The Results

We found that the algorithm was extremely accurate in distinguishing the two. Even when applied on topics it hasn’t seen before. Applied to the customers domain, it managed to identify around 95% of all false positive findings as such (compared to the 65% the customers previous approach was able to provide) without overly changing the number of false negatives.

So next time you have to search through a large document, maybe you can utilize natural language processing to make you life easier and your search more efficient.