Structuring system test suites – antipatterns and a best practice

Typically, you structure your test suite hierarchically to keep it organized. The way you structure your system test suite has a considerable impact on how effectively and efficiently you can use your tests. A good structure of a system test suite supports:

- maintaining tests when requirements change

- determining which part of your functionality has been tested, and to what degree (coverage)

- finding and reusing related tests while creating new tests

- selecting a set of test cases to execute (test plan)

- finding the root cause of a defect (debugging)

In my opinion, the first two points are the most important ones, as they touch the core of what system tests should do, namely to ensure that the system fulfills its requirements.

Of course, each project is different, and no matter which structure I choose, I always end up running into the “tyranny of the dominant decomposition” (i.e. there is no such thing as THE best way to build a hierarchy). Nevertheless, I have seen a couple of antipatterns in the past that only very rarely make sense.

Antipattern 1: Structure system tests by releases

What does it look like?

On the top level, you structure your system test suite by release numbers (or names). Let’s consider an imaginary piece of software for playing video and audio media (e.g. on a Raspberry Pi). In its first version, the software can play video over HDMI and VGA output. It can also play music over an analog output. In a second version, the software can additionally record videos and play audio over HDMI. Also, the play video functionality was changed. In a follow-up version 2.1, the play video function was changed once more. If you structure your test suite according to the releases, it looks something like this:

Each folder contains the tests that were created for new or changed functionality for this specific release.

What is the problem?

The main problem with this structure is that you will have a hard time finding and adapting tests when requirements change. You have to figure out in which release the functionality was introduced or changed. There is a good chance that you will end up writing a new test from scratch. Another problem is that such a structure does not give you a good overview of which functionality is actually tested.
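To make the problem concrete, here is a minimal sketch of a release-based layout for the media player example. The folder names, test names, and the helper `folders_containing` are assumptions made up for illustration; they only mirror the releases described above and are not taken from a real test suite.

```python
# Hypothetical release-based layout: release folder -> tests created for it.
# All folder and test names are illustrative assumptions.
RELEASE_LAYOUT = {
    "release_1.0": ["play_video_hdmi", "play_video_vga", "play_audio_analog"],
    "release_2.0": ["record_video", "play_audio_hdmi", "play_video_hdmi", "play_video_vga"],
    "release_2.1": ["play_video_hdmi"],
}


def folders_containing(layout, function_prefix):
    """Return every folder that holds at least one test for the given function."""
    return [
        folder
        for folder, tests in layout.items()
        if any(test.startswith(function_prefix) for test in tests)
    ]


# Tests for the play video function are scattered over all three releases.
print(folders_containing(RELEASE_LAYOUT, "play_video"))
```

If a play video requirement changes, you would have to inspect every one of these folders to decide which test is the current one.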

Antipattern 2: Structure system tests by output signal

What does it look like?

This is a pattern I regularly see in software for embedded systems. Tests are structured according to the main outputs that they relate to. The rationale behind this structure is that tests that need similar instrumentation can be executed together. In our example, we have HDMI and VGA video outputs and an analog audio output. The test suite in this case looks like this. Note that for recording I introduced the virtual output “Memory”, to be consistent.

What is the problem?

As above, the problem with this structure is that you lose the relation to the functionality that is tested. Tests that trigger the same output signal may still refer to very different parts of the functionality. In the extreme case, triggering the signal might not even be a core part of the functionality, or there may be several equally important signals. In the case of the record function, for example, I had to come up with an artificial output. Maintaining such test cases and assessing the quality of the test suite in terms of requirements coverage is hard (e.g. I would have to look under “Memory” to find the test for the recording).

Antipattern 3: Structure system tests by technical modules

What does it look like?

Even though this sounds paradoxical at first, as we are talking about system tests, I encounter such a pattern fairly often. The only good reasons to use such a structure are that the system tests are in fact component or sub-system tests, or that there is a clear relationship between module and functionality. I sometimes find the latter in very modular systems, where each piece of functionality is implemented as an individual plugin to the system. In our example case, there might be two main modules, a decoder and a recorder.

The test suite looks like the one in the following illustration.

What is the problem?

The module structure may change over time. For example, instead of a generic decoder module, there might be separate video and audio decoders in the future. Typically, the architecture is more fragile than the functional structure of the system. Soon, the structure of your test suite will no longer match the module structure (unless you restructure your tests accordingly). Also, some functionality may be split across modules; in this case, mapping tests to functionality and requirements will get messy, too.

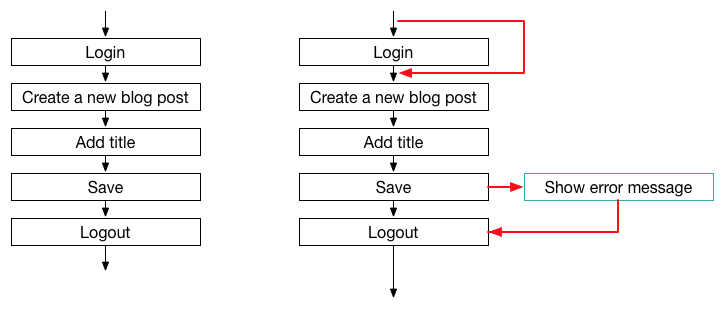

Above, I presented some antipatterns for structuring system tests. A good way to structure system tests, in my opinion, is to use the functional structure of the system.

Best practice: Structure system tests according to functionality and requirements

What does it look like?

On the top level, you have the main functions of the system, maybe further broken down into sub-functions and individual requirements. In our case, we have two main functions: video handling and audio handling. The video handling function is further broken down into playback and recording. Our test suite looks like this:

Why is this good?

Using this structure, you can easily determine which tests to change or reuse in case of new or changed requirements. You can also easily determine which parts of the functionality you are covering with your tests and which you are not.

Are there still problems?

Of course there are. For example, sometimes there are tests that address the interplay between different functions or that test whole business processes. The first problem can often be solved by putting the tests under a common super-function of the sub-functions that should be tested. For the second problem, you could add additional folders for end-to-end process tests.
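For the first case, the common super-function can be derived mechanically from the paths of the functions involved. This is only a sketch under the assumption that functions are identified by slash-separated paths such as `video/playback`; the helper name `common_super_function` is made up for illustration.

```python
def common_super_function(*function_paths):
    """Return the deepest function that contains all given sub-functions.

    An empty string means the functions only share the root of the suite.
    """
    split_paths = [path.split("/") for path in function_paths]
    common = []
    # Walk down the hierarchy level by level until the paths diverge.
    for parts in zip(*split_paths):
        if len(set(parts)) != 1:
            break
        common.append(parts[0])
    return "/".join(common)


# A test for "record a video, then play it back" would go under "video".
print(common_super_function("video/playback", "video/recording"))
```

A test that touches both video and audio would end up at the root of the suite, which may be a hint that it is better placed in a separate folder for end-to-end tests.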

Structuring system test suites is just one way of organizing your tests. If you use sophisticated tools for test management, you have plenty more possibilities, such as setting trace links between requirements and tests. However, starting with a sensible structure is still a good first step.

Do you structure your system tests in a different way? Let me know how and why!