The ugly truth about automatic methods for requirements engineering quality.

Ok, after I publish this blog post, I will probably get some angry calls from my sales department… Well, truth must be told.

There are many crazy defects in requirements, and, as I wrote in my last post, you can detect quite a bunch of them automatically (and you should do so!). When I present our automatic methods for natural language requirement smells or automatic methods for detecting defects in tests to our customers, I’m proud to say that they are usually very excited. Sometimes they are too excited and then this can turn into a problem.

What I mean is that I explain all the amazing things that you can detect with tools, and suddenly people think that the tool will solve all the problems they face. Spoiler alert: It doesn’t. And because we’re a company that is interested in happy customers, I want to briefly summarize all the problems (that come to my mind) that a tool can’t solve. And because I don’t want to leave you in despair, I will also suggest some solutions, showing how I personally would work on each problem.

Did I forget something in the list? Let me know via mail, twitter (@henningfemmer) or in the comments.

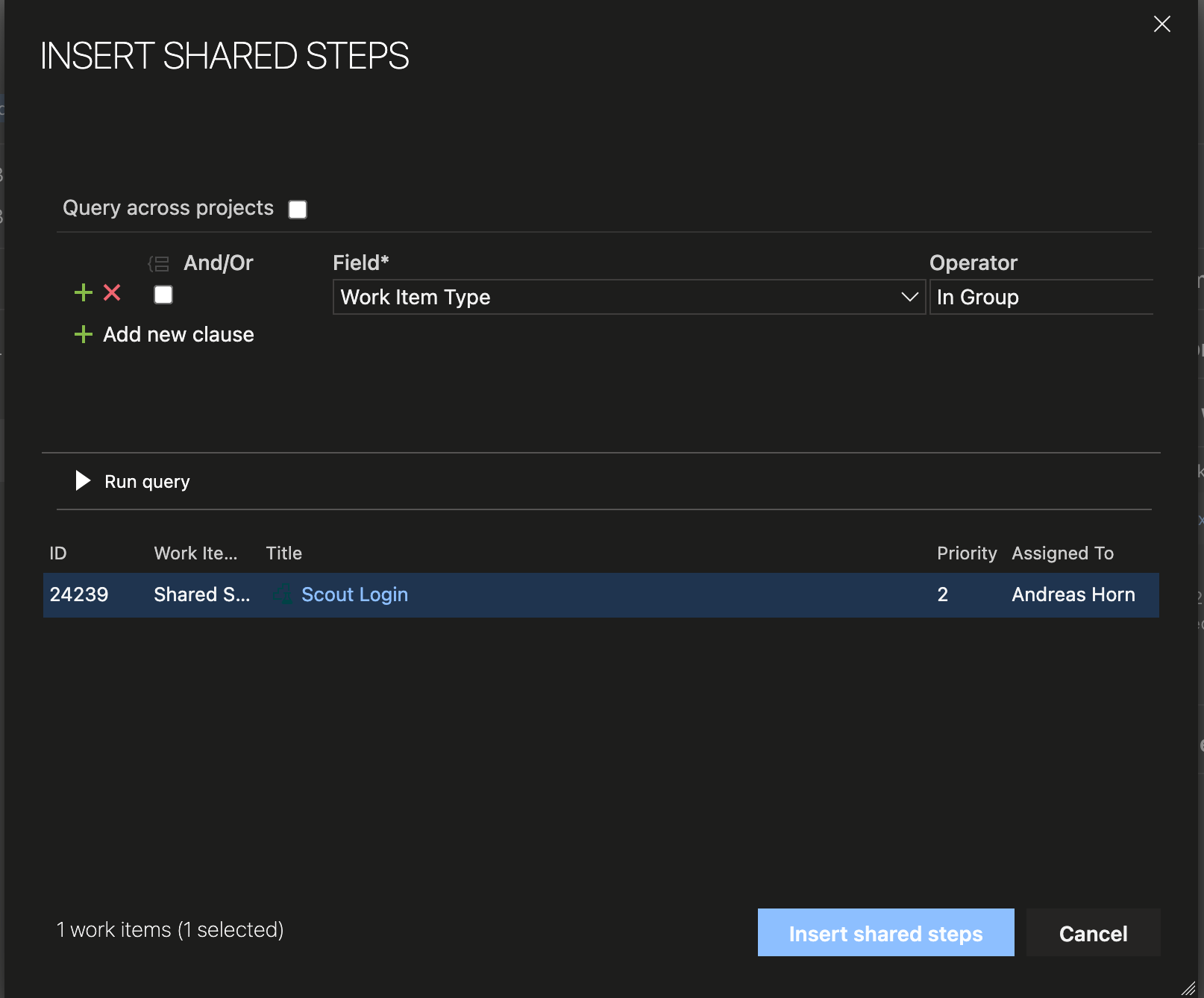

Now, we studied this question in depth in a paper earlier this year. We went through pages and pages of review comments and tried to understand which of them can be detected automatically and which cannot (check our paper for details). You can find the detailed result in the figure. Let me summarize what we found as four core problems, each a reason why you cannot detect a certain defect automatically.

Which defects cannot be detected, taken from H. Femmer, D. Méndez Fernández, S. Wagner, and S. Eder, “Rapid quality assurance with Requirements Smells,” J. Syst. Softw., 2015.

1. You want a tool to detect something that you cannot really define

Quite a few comments in the reviews that we looked at said something like “I don’t really understand this paragraph.” or “The diagram is not neat.” Why is it impossible to detect these issues automatically? A good starting point for understanding what you can and cannot detect is to ask yourself: Can I precisely define what I am searching for? In the above cases, you do not know which quality factor, which aspect, is really the problem. And of course, if you don’t know it, there is no way to tell a computer what to look for.
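To make the “can I precisely define it?” test concrete, here is a minimal sketch of a smell that passes it: vague wording. The word list and the function name `find_vague_words` are my own assumptions for illustration, not our actual smell catalogue or tool.

```python
import re

# Hypothetical word list, chosen for illustration only.
VAGUE_WORDS = {"fast", "easy", "user-friendly", "appropriate", "as far as possible"}


def find_vague_words(requirement: str) -> list[str]:
    """Return the phrases from VAGUE_WORDS that occur as whole words in the text."""
    text = requirement.lower()
    return sorted(
        phrase
        for phrase in VAGUE_WORDS
        # \b keeps "fast" from matching inside "breakfast".
        if re.search(r"\b" + re.escape(phrase) + r"\b", text)
    )


print(find_vague_words("The system shall respond fast and be user-friendly."))
```

The check works because “vague word” has a definition you can write down as a list. For “I don’t really understand this paragraph”, there is nothing comparable to put into the list.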

Solution: Look deeper. Try to look at multiple similar problems. Ask your colleagues.

Alternative Solution: Wait until machine learning solves this problem. Just be aware that this may take a few years… 😉

2. You want a tool to detect something that needs in-depth text understanding

A good example of a defect that falls into this category is conflicting requirements. If you specify in one requirement that “the traffic light should be green for 30 seconds”, and in another requirement that “the green, red, and yellow cycle should in total take less than 25 seconds”, it is very difficult for NLP to understand that we have a conflict here.
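To show where the difficulty sits, here is a small sketch in which a human has already translated both sentences into numbers. The variable and function names are assumptions made up for this example. Once the facts are formalized, the check is a single comparison; the part NLP struggles with is getting from the two sentences to these two numbers and knowing that the green phase is part of the cycle.

```python
# Facts extracted by hand from the two requirements (the hard step).
green_phase_seconds = 30  # "the traffic light should be green for 30 seconds"
max_cycle_seconds = 25    # "the ... cycle should in total take less than 25 seconds"


def is_conflicting(green_phase_seconds: float, max_cycle_seconds: float) -> bool:
    """The cycle contains the green phase, so it lasts at least as long as that phase.

    A cycle that must be strictly shorter than max_cycle_seconds cannot
    contain a green phase of that length or longer.
    """
    return green_phase_seconds >= max_cycle_seconds


print(is_conflicting(green_phase_seconds, max_cycle_seconds))
```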

Natural Language Processing (NLP) has made tremendous steps forward. In my last post, I discussed a few ways to detect issues in requirements and tests by using NLP. However, there is so much left! In a great article, Erik Cambria and Bebo White discuss how we’re still working on syntax (basically understanding the grammar, as I described in the last blog post). The step that we’re getting better at is semantics. Semantics is basically understanding what a sentence really means, which needs background knowledge. More and more companies apply ontologies to make this work, but these are still very far from perfect. The last curve in Cambria’s and White’s discussion is pragmatics. Pragmatics would enable a computer to understand not just a single sentence, but a complete paragraph or even document. What makes pragmatics so difficult is understanding how terms are related. So, we need not just a perfect understanding of grammar and background knowledge, but also of the relations between paragraphs. For example, in one paragraph you speak about Uncle Bob, and in the next paragraph just about “your Uncle” or “Bob”; the human mind immediately understands that these refer to the same concept. The computer is still far away from that point.

![NLP Curves, from [1] E. Cambria and B. White, “Jumping NLP Curves: A Review of Natural Language Processing Research,” IEEE Comput. Intell. Mag., vol. 9, no. 2, pp. 48–57, 2014.](https://www.qualicen.de/blog/wp-content/uploads/2016/09/Screen-Shot-2016-09-22-at-11.32.33.png)

NLP Curves, from [1] E. Cambria and B. White, “Jumping NLP Curves: A Review of Natural Language Processing Research,” IEEE Comput. Intell. Mag., vol. 9, no. 2, pp. 48–57, 2014.

3. You want a tool to detect something that needs information from the domain

Some defects in requirements are defects because they stand in conflict with the real world. This can be because the requirement is invalid (does not correctly reflect stakeholder needs) or because the requirement is infeasible. Some of this is simple, like just a definition of terms, as in a glossary. Other aspects are more complex. For example, a customer once shared the requirements of a project in which a certain component was required to be relocatable to other sites of the company. All was fine until the (“proper”) engineer burst out laughing: the “component” was a huge, heavyweight structure that would require the police to close the highway for traffic…

For some of these problems, researchers try to use ontologies to detect them. But so far, these approaches have not reached sufficient quality to help in practice.

Solution: I would argue that these kinds of defects are the core business of manual analysis and the human being. This is really what engineers and domain experts are great at. This is what should be the focus of the review.

4. You want a tool to detect something that is outside of what you analyze

So, this point is quite simple. For example, if you look at requirements, it will be very hard to detect which requirements are not there. In addition, you will struggle to systematically find defects that relate to the following:

- You use a certain format (such as user stories or use cases) but another format would be better.

- You use a certain tool which makes it really difficult for you to work with the requirements.

- You apply a certain process to create your documents, but it’s the wrong process.

- Your team has issues and doesn’t work well together.

- Your customer or management sucks.

Now, I’m sure all of these aspects are very visible in the requirements. Basically, if your team sucks, the requirements will suck, too… But the leverage is not within the requirements, but outside.

Solution: Get neutral outsiders on board. This can be from another team, department or even company. Just make sure that they are really neutral and do not have a hidden agenda.

So what?

So, if a customer asks me, “Can you automatically detect this defect?”, in my mind, I basically walk through the above list and see if anything fits. And yes, very often the answer is “Sorry, that is not possible.” But in all other cases, there is relief. Think about small aspects or smells of the problems that you actually can find. And for the other problems, rethink your RE processes, talk to the folks that have to read your requirements documents, and wade through the masses of requirements management tools until you find the tool that fits your process. Very often, there are simple solutions that solve a great deal of the complex problem.

But finally, the ugly truth is: There will still be manual reviews. There are plenty of defects in requirements documents that cannot be detected automatically, for the reasons I explained above. However, don’t uninstall your automatic tools just yet!

Automatic tools make it possible to separate the difficult from the stupid tasks. This can make things faster and better:

- Faster: With automatic tools, manual reviews can focus on the key, difficult problems, so that reviewers don’t waste time on simple issues, such as complicated language. And these benefits multiply. As Frank Rabeler points out in his great article, requirements are read at least 30-50 times! Think about how much time you could save here.

- Better: Ambiguities and smaller problems have unpredictable consequences. You never know whether the readers understand the text correctly. Additionally, when you have solved the simple problems automatically up front, they do not distract the reviewers from the difficult issues. This way, reviews become more effective and find more relevant defects.

It will be interesting to see how far automatic tools can push these barriers in the future.


30/03/2020