Which quality defects can you automatically detect in system tests and requirements?

I am a strong advocate of modern, automatic methods that improve our day-to-day lives. I really don’t want to check by hand whether my tests and requirements fit my template or whether my sentences are readable. Quality assurance and defect detection, for example reviews or inspections, should therefore use automation as far as possible.

BUT: When I speak to clients, some people get so hooked on the idea of automatic smell detection that I need to slow them down. This post therefore gives a rough overview of what can be detected automatically.

The answer basically depends on two questions:

- How much syntax (or structure) do your artifacts and tests have?

- Which language do you use?

In this post I will refer to requirements artifacts here and there, but the answers are pretty much the same for both system tests and requirements.

How’s your syntax?

To answer what is possible and what is not, we first have to look at the syntax we use. Syntax defines the basic building blocks and structure of our requirements, and there are thousands of possible syntaxes for requirements: free text, sentence patterns, user stories, use case templates, structured use cases in UML, state machines, and so on. In general, the rule is simple: the more precise your syntax (along, of course, with a precise meaning, the semantics), the more you can do with automatic methods. But let’s look at this in more detail. For requirements, we differentiate four groups, extending the structure proposed by Luisa Mich and others:

- Common natural language: If you describe a requirement with a couple of sentences in plain German or English, you are writing it down in common natural language. To give you an example, underneath is a small excerpt of the requirements specification for a pacemaker. The only tiny bit of syntax here is that each requirement sentence contains the word ‘shall’. Although there is not much structure, we can still find defects related to how you use natural language. After all, not everything that is perfect English is also perfect English for requirements. For example, it is widely accepted that passive voice tends to make texts harder to understand. Automatic tools therefore help requirements authors by pointing out these problematic passives. Here, natural language processing (NLP) comes into play, and I will explain a little later what can be detected with NLP.

- Language based on sentence patterns: A slightly more structured way to write requirements is to use sentence patterns. To give you an example, together with Jonas Eckhardt and other colleagues from TUM, we developed patterns for performance requirements. Have a look at them in the figure underneath. If we know that requirements are written down with such a pattern, we can check, for example, which events, operations, or components of the system are named and analyze this information. User stories are a second example. They usually follow the pattern As a [Role], I want [Function], so that [Goal]. To give you an idea of what we can detect automatically: for user stories, it is really simple to ensure automatically that every story has a [Goal] part.

Sentence patterns as proposed by Jonas Eckhardt and others, figure taken from the paper

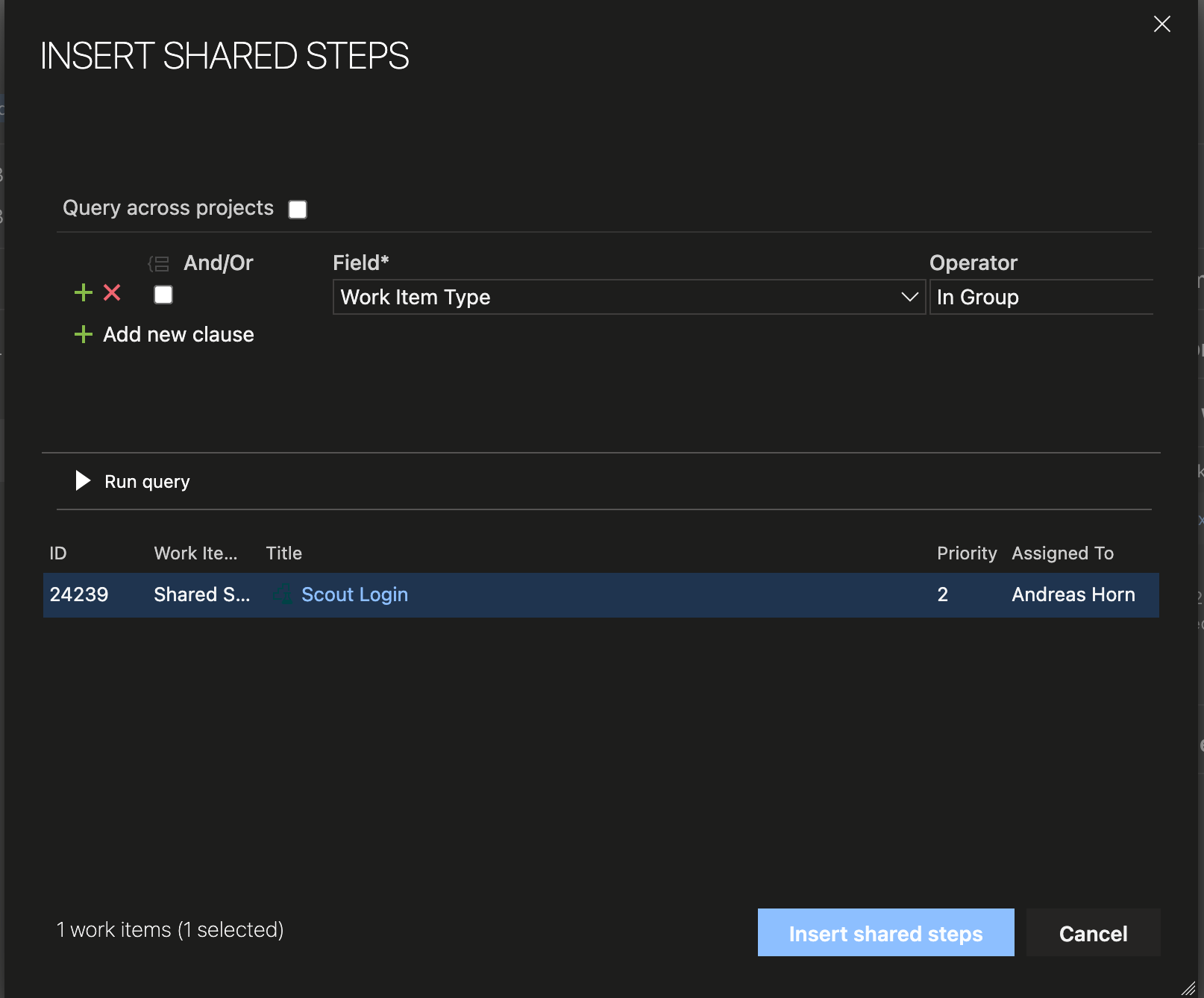
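Checking the [Goal] part of a user story needs little more than pattern matching. The following Python sketch is only an illustration, not our tool: it assumes each story is a single sentence of the form As a [Role], I want [Function], so that [Goal], and the regular expression and the function name `check_story` are hypothetical.

```python
import re
from typing import List

# Hypothetical regex for "As a [Role], I want [Function], so that [Goal]."
# The "so that" clause is optional here, so that stories lacking it still match
# and can be reported instead of being rejected outright.
STORY_RE = re.compile(
    r"^As an? (?P<role>.+?), I want (?P<function>.+?)"
    r"(?:,? so that (?P<goal>.+?))?\.?$",
    re.IGNORECASE,
)


def check_story(story: str) -> List[str]:
    """Return the findings for one user story (an empty list means no finding)."""
    match = STORY_RE.match(story.strip())
    if match is None:
        return ["does not follow the user story pattern"]
    findings = []
    if not match.group("goal"):
        findings.append("missing [Goal] part ('so that ...')")
    return findings


stories = [
    "As a nurse, I want to see the heart rate, so that I can react to alarms.",
    "As a nurse, I want to see the heart rate.",
    "The system shall log all errors.",
]
for story in stories:
    print(story, "->", check_story(story) or "ok")
```

A production check would also have to cope with variants such as ‘in order to’ instead of ‘so that’, or stories spread over several lines.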

- Document structure based on templates: Many projects write down their requirements by taking an existing document template with various predefined headings and filling in the content underneath them. Usually that means you apply some standard structure. Use case templates are one example. Check out, for instance, how Munich Re can automatically detect bad use case flows or incorrectly defined references in their use case template: they mark extension points with curly brackets, ‘{Extension Point Name}’, so that anywhere in the document you can refer back to the extension point. If the automatic analysis knows about this syntactic element, it can easily detect whether an extension point is undefined, unused, or even defined multiple times.

Most requirements management tools also come with templates of these sorts.

Extension points are used, but were not defined in a use case template (taken from our paper)
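The three extension point defects can be detected in a few lines of Python. The convention below is an assumption for illustration, not Munich Re’s actual template: a line that starts with `{Name}` defines the extension point, and every other `{Name}` occurrence references it. The use case text and the function name `check_extension_points` are made up.

```python
import re
from collections import Counter
from typing import Dict, List

# Matches "{Some Name}" and captures the name between the braces.
EXTENSION_POINT_RE = re.compile(r"\{([^{}]+)\}")


def check_extension_points(lines: List[str]) -> Dict[str, List[str]]:
    """Report undefined, unused, and multiply defined extension points.

    Assumed convention: "{Name}" at the very start of a line is a definition;
    any other occurrence is a reference.
    """
    definitions: Counter = Counter()
    references: Counter = Counter()
    for line in lines:
        text = line.strip()
        for match in EXTENSION_POINT_RE.finditer(text):
            name = match.group(1).strip()
            if match.start() == 0:
                definitions[name] += 1
            else:
                references[name] += 1
    return {
        "undefined": sorted(set(references) - set(definitions)),
        "unused": sorted(set(definitions) - set(references)),
        "defined_multiple_times": sorted(n for n, c in definitions.items() if c > 1),
    }


USE_CASE = """\
Main flow
1. The user inserts the card.
{Card Inserted} The system asks for the PIN.
2. The user enters the PIN.
{PIN Entered} The system checks the PIN.
{PIN Entered} The system logs the attempt.
Extensions
a. At {Card Inserted}: the card is unreadable, the system ejects it.
b. At {PIN Checked}: the PIN is wrong, the system asks again.
""".splitlines()

print(check_extension_points(USE_CASE))
```

Here ‘PIN Checked’ is referenced but never defined, while ‘PIN Entered’ is defined twice and never referenced.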

- Formalized language: At the extreme end, in state machines (see example underneath), everything has not only a defined syntax but also very strict semantics (meaning). Consequently, with such a strong syntax, automation can run at full throttle. With techniques like model checking, you can detect whether certain properties of your requirements hold. But of course, creating such input demands a great deal of effort, and your models still have to be correct to be of any help. And it’s really debatable how well such a diagram enables you to communicate requirements to non-IT stakeholders. So if your goal is to specify rather than to communicate, this is a good way to go.

![Transition diagram for a finite state machine modeling a turnstile, a common introductory example used to explain state machines. The turnstile has two states, Locked and Unlocked. There are two inputs affecting the state: depositing a coin in the turnstile, coin, and pushing on the turnstile's bar, push. The turnstile bar is initially locked, and pushing on it has no effect. Putting a coin in changes the state to Unlocked, unlocking the bar so it can turn. A customer pushing through the turnstile changes the state back to Locked so additional customers can't get through until another coin is deposited. The arrow into the Locked node from the black dot indicates it is the initial state. By Chetvorno [CC0], via Wikimedia Commons](https://www.qualicen.de/blog/wp-content/uploads/2016/08/500px-Turnstile_state_machine_colored.svg_.png)

Transition diagram for a finite state machine modeling a turnstile, a common introductory example used to explain state machines. The turnstile has two states, Locked and Unlocked. There are two inputs affecting the state: depositing a coin in the turnstile (Coin) and pushing on the turnstile’s bar (Push). The turnstile bar is initially locked, and pushing on it has no effect. Putting a coin in changes the state to Unlocked. A customer pushing through the turnstile changes the state back to Locked so additional customers can’t get through until another coin is deposited. The arrow into the Locked node from the black dot indicates it is the initial state.

By Chetvorno [CC0], via Wikimedia Commons
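To give a feeling for why a formal model allows so much automation, here is a toy explicit-state exploration of the turnstile in Python. It only sketches the idea behind model checking; real model checkers such as NuSMV or SPIN use dedicated input languages and far more sophisticated algorithms. The two properties (every reachable state handles every input, and Push always leads to Locked) and all function names are our own choices for illustration.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Transitions = Dict[Tuple[str, str], str]

INPUTS = ("Coin", "Push")

# Turnstile from the figure: (state, input) -> next state.
TURNSTILE: Transitions = {
    ("Locked", "Coin"): "Unlocked",
    ("Locked", "Push"): "Locked",      # pushing a locked bar has no effect
    ("Unlocked", "Coin"): "Unlocked",  # an extra coin keeps it unlocked
    ("Unlocked", "Push"): "Locked",    # passing through locks it again
}


def reachable(transitions: Transitions, start: str) -> Set[str]:
    """Breadth-first search over all states reachable from start."""
    seen, queue = {start}, deque([start])
    while queue:
        state = queue.popleft()
        for symbol in INPUTS:
            nxt = transitions.get((state, symbol))
            if nxt is not None and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def missing_transitions(transitions: Transitions, start: str) -> List[Tuple[str, str]]:
    """Property 1: every reachable state must define a successor for every input."""
    return sorted(
        (state, symbol)
        for state in reachable(transitions, start)
        for symbol in INPUTS
        if (state, symbol) not in transitions
    )


def push_always_locks(transitions: Transitions, start: str) -> bool:
    """Property 2: in every reachable state, Push leads to Locked."""
    return all(
        transitions.get((state, "Push")) == "Locked"
        for state in reachable(transitions, start)
    )


print(missing_transitions(TURNSTILE, "Locked"))
print(push_always_locks(TURNSTILE, "Locked"))

# A flawed variant that forgot what happens on Coin in the Unlocked state:
flawed = {k: v for k, v in TURNSTILE.items() if k != ("Unlocked", "Coin")}
print(missing_transitions(flawed, "Locked"))
```

Because the model is exhaustive, the check covers every reachable state rather than a handful of test runs, which is exactly what natural language requirements cannot offer.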

Of these options, by far most companies write down their requirements in natural language or in structured natural language using patterns or templates. In other words, most requirements are based on some form of natural language. So how much does a computer understand when we feed it natural language text?

Let’s process some natural language!

Disclaimer: Our tools analyze German and English texts, so this is what I know about. If things are different in other languages, let me know!

Many quality issues in requirements and tests originate from how you use natural language. For example, you use passive voice where you could use active voice. Or you use a pronoun, and it is unclear which word the pronoun refers to. See the example underneath: Who must communicate? The software? The services? Or the applications?

To detect these kinds of problems, we must analyze the language used in natural language texts. Here, NLP comes into play. NLP takes a string of characters as input and extracts various types of information, most of which build upon each other. In the following, I’ll give you a brief overview based on DKPro and Stanford NLP, which in my view are the most versatile tools on the market.

For most of these examples, check out Stanford’s online demo corenlp.run and displaCy from the Berlin-based spacy.io team. It’s amazing to see how computers understand our grammar. Let’s look at some of the NLP components in more depth:

- Tokenization: The first step is finding the word boundaries. Why should that be difficult? Just look for a space or punctuation. But as always, the devil is in the details: to a machine, you are sending nothing but a stream of characters. The tokenizer has to deal with contractions (it’s) and abbreviations (N.W.A.), and its output is the foundation for all following steps.

- Sentence splitting: Next, from the stream of tokens, we try to determine where one sentence ends and the next one starts. Simple, you say? Not quite, since periods may also appear in the middle of a sentence (e.g., etc., other abbreviations, enumerations, and so forth).

- POS tagging: Now we’re getting into linguistics. We try to determine the grammatical role of each word in the sentence, its part-of-speech (POS) tag, such as verb or noun. Today this is usually achieved with sophisticated machine learning techniques. For English, the most widely used tagset is the Penn Treebank II tagset; you can look up what the tags mean on one of the many cheat sheets available online.

- Morphology: Morphology is sometimes included in the POS tag, but it usually goes deeper. Check out, for example, the morphological features taken into account in the DKPro type system. For each word, we can distinguish various features, such as number, tense, or case. Unfortunately, the available libraries and their accuracy vary.

- Syntactic parsing (structure): Now that we know all the details about each word, parsing tries to build a hierarchical, grammatical structure from these building blocks. The developers of Google’s Parsey McParseface (yes, that’s the real name) explain in depth how this works in their blog, and it’s a great read. At its core, though, you end up knowing the main and conditional clauses and the main verb of a sentence, with adjectives and nouns grouped together.

- Syntactic parsing (dependencies): Next, the parser tries to understand the dependencies between the words. For example, the following image shows the dependencies of the previous example, as rendered by displaCy. With this, the automation knows that ‘software’ is the subject of ‘implement’ and that ‘services’ is its object. This lets us understand the core of the sentence structurally. Pretty awesome, eh?

- Others: This is just the beginning. From here, we can add outside information and start trying to understand the semantics of the sentence. We can also estimate what a word probably sounds like (phonetics), and we can search for references between words (coreference resolution). Finally, and this is a whole world of its own, we can try to understand pragmatics, which is basically understanding a whole paragraph in its context.
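To see why tokenization and sentence splitting are less trivial than they look, compare a naive split on ". " with a slightly smarter regular-expression approach. This is a hand-rolled Python toy, not how Stanford CoreNLP or DKPro work internally; the token pattern and the tiny abbreviation list are assumptions, and real tools use much larger rule sets or trained models.

```python
import re
from typing import List

# Illustrative token pattern; alternatives are tried left to right.
TOKEN_RE = re.compile(
    r"(?:[A-Za-z]\.){2,}"  # dotted abbreviations such as "N.W.A." or "e.g."
    r"|'[a-z]+"            # clitics split off contractions, e.g. the "'s" of "It's"
    r"|\w+"                # ordinary words and numbers
    r"|[^\w\s]"            # any other single punctuation character
)

# Hypothetical list of words after which a period usually does NOT end a sentence.
ABBREVIATIONS = {"etc", "approx", "vs"}
SENTENCE_END = {".", "!", "?"}


def tokenize(text: str) -> List[str]:
    return TOKEN_RE.findall(text)


def split_sentences(tokens: List[str]) -> List[List[str]]:
    sentences: List[List[str]] = []
    current: List[str] = []
    for i, token in enumerate(tokens):
        current.append(token)
        previous = tokens[i - 1] if i > 0 else ""
        # A period right after a known abbreviation does not close the sentence.
        if token in SENTENCE_END and not (token == "." and previous in ABBREVIATIONS):
            sentences.append(current)
            current = []
    if current:  # trailing text without final punctuation
        sentences.append(current)
    return sentences


requirement = "The system shall log errors, warnings etc. in a file. It's checked daily."

print(requirement.split(". "))  # naive: wrongly breaks after "etc."
print(tokenize("It's by N.W.A., e.g. here."))
for sentence in split_sentences(tokenize(requirement)):
    print(" ".join(sentence))
```

Even this toy shows the trade-offs: an ‘etc.’ that really ends a sentence is now missed as a boundary, and the abbreviation rule swallows a sentence-final period after ‘N.W.A.’.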

So, how does this help? For example, from the POS tag we know that ‘which’ is a relative pronoun (tagged WDT in the Penn Treebank tagset), so there should be a word it refers to. But when we look at the parse tree for potential reference candidates, we see that several nouns qualify. This is where your brain starts working: it considers the semantics of the words. Could the software communicate? Could the services? Could the applications? Would it make sense? As an advocate of readable requirements, I say: take that load off your readers’ brains and make the sentence unambiguous straight away.
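Once POS tags are available, simple smell checks take only a few lines of code. The Python sketch below works on made-up sentences that we tagged by hand with Penn Treebank tags, so it needs no NLP library; in practice, the tags would come from a tagger such as Stanford CoreNLP. Both heuristics are deliberately naive and our own illustration: a form of ‘to be’ followed (possibly after adverbs) by a past participle (VBN) signals passive voice, and a relative ‘which’ (WDT) preceded by more than one noun signals a potentially ambiguous reference.

```python
from typing import List, Tuple

Token = Tuple[str, str]  # (word, Penn Treebank POS tag)

BE_FORMS = {"am", "is", "are", "was", "were", "be", "been", "being"}
ADVERB_TAGS = {"RB", "RBR", "RBS"}
NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}


def find_passives(tokens: List[Token]) -> List[str]:
    """Flag 'be' + past participle (VBN), allowing adverbs in between."""
    hits = []
    for i, (word, _tag) in enumerate(tokens):
        if word.lower() not in BE_FORMS:
            continue
        j = i + 1
        while j < len(tokens) and tokens[j][1] in ADVERB_TAGS:
            j += 1  # skip adverbs, e.g. "is automatically stored"
        if j < len(tokens) and tokens[j][1] == "VBN":
            hits.append(f"{word} {tokens[j][0]}")
    return hits


def ambiguous_which(tokens: List[Token]) -> List[List[str]]:
    """For each relative 'which' with several preceding nouns, list the candidates."""
    findings = []
    for i, (word, tag) in enumerate(tokens):
        if word.lower() == "which" and tag == "WDT":
            candidates = [w for w, t in tokens[:i] if t in NOUN_TAGS]
            if len(candidates) > 1:
                findings.append(candidates)
    return findings


passive = [("The", "DT"), ("data", "NN"), ("is", "VBZ"), ("automatically", "RB"),
           ("stored", "VBN"), ("by", "IN"), ("the", "DT"), ("system", "NN"), (".", ".")]
pronoun = [("The", "DT"), ("software", "NN"), ("shall", "MD"), ("implement", "VB"),
           ("services", "NNS"), ("for", "IN"), ("the", "DT"), ("applications", "NNS"),
           ("which", "WDT"), ("must", "MD"), ("communicate", "VB"), (".", ".")]

print(find_passives(passive))
print(ambiguous_which(pronoun))
```

Note that the candidate nouns are only counted, not judged: deciding which of them makes sense is exactly the semantic work the reader’s brain has to do. The passive heuristic also produces false positives, for example ‘is closed’ describing a state rather than an action.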

To sum it up…

So, depending on how you write down your requirements, more or less can be detected automatically. Basically, two aspects matter. First: in which form do you write down your requirements? Do you have templates? Do you have sentence patterns? Good, because the more syntax, the more is possible.

Second is the language you use. NLP offers plenty of options to understand a sentence syntactically. More becomes possible every day, and whatever I wrote here will very likely be outdated soon. Plus, tagsets, libraries, and accuracy all vary from language to language. Consequently, what is simple in one language may be hard in another. If you’re interested in a particular aspect, or if I missed something important, let’s discuss in the comments, via twitter (@henningfemmer) or via mail.

Now go and play with the Stanford Parser, Stanford CoreNLP, displaCy, and DKPro! 🙂